Multi-Agent AI Panel

Overview & Approach

Role: Senior UX Researcher & Strategist

Company: PlayStation

Team: Independent project in collaboration with a Stanford AI researcher

Timeline: ~6 Weeks

Budget: Internal pilot (no allocated funding)

UX designers aim to make products everyone can enjoy, but without direct feedback from people with disabilities, inclusive design becomes guesswork. Accessibility standards and regulations such as WCAG and the European Accessibility Act (EAA) are vast and complex, and limited research budgets often rule out early-stage testing.

How can our UX designers get feedback on their product ideas at the earliest stages of the design process, so that their products are as compliant and as inclusive as possible?

Inspiration Through Experimentation: Learning from AI Communities

During my coursework in “UI/UX Design for AI Products”, I studied a Stanford experiment exploring how multiple AI agents could interact with one another as members of a small digital community. These agents demonstrated emergent social behaviors: collaborating, sharing information, and even forming “relationships” that mimicked human dynamics.

This experiment inspired a new approach to inclusive design: if AI agents could simulate social interaction, perhaps they could also simulate different user perspectives. I envisioned creating a panel of AI-driven personas, each representing a distinct disability, capable of evaluating digital products and providing feedback as real accessibility consultants might.

Visualization of the Stanford experiment on social generative AI agents

Simulating Inclusive Feedback with AI

Insight: UX designers needed an accessible, low-cost way to test early design concepts for inclusivity without relying on time-intensive research panels.

Action:

Conceptualized an AI-powered feedback system inspired by Stanford’s “social generative agents” experiment

Collaborated with a Stanford AI researcher to define parameters and interaction logic

Result:

Produced synthesized research reports detailing accessibility challenges across gaming products

Building the MVP in CrewAI

Insight: Design feedback needed to go beyond compliance. It had to reflect the real-world experiences of players with disabilities.

Action:

Coded AI personas modeled after real gaming accessibility consultants I had previously worked with

Built an additional UX Research Agent to aggregate findings into a structured, human-readable report

Created a functional prototype in CrewAI, connecting it to Serper for web search retrieval and to ChatGPT for analysis
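CrewAI describes each agent through a role, a goal, and a backstory. The following minimal sketch shows how a disability-specific persona panel might be written as plain data before it is handed to CrewAI. The `Persona` class, the `to_agent_kwargs` helper, and the example personas are hypothetical illustrations. They are not the project's actual configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    """Hypothetical description of one simulated panel member."""
    name: str
    disability: str
    assistive_tech: tuple[str, ...] = ()

    def to_agent_kwargs(self) -> dict[str, str]:
        # Keys mirror the role/goal/backstory parameters that crewai.Agent accepts;
        # the wording of each value is an illustrative assumption.
        tech = ", ".join(self.assistive_tech) or "no assistive technology"
        return {
            "role": f"{self.disability} Accessibility Consultant",
            "goal": (
                "Evaluate a game design concept and report the barriers a player "
                f"with {self.disability.lower()} would face"
            ),
            "backstory": (
                f"You are {self.name}, a gamer with {self.disability.lower()} "
                f"who relies on {tech}."
            ),
        }


# Hypothetical panel members; names and details are placeholders.
PANEL = [
    Persona("Alex", "Blindness", ("screen reader",)),
    Persona("Sam", "Hearing loss", ("captions",)),
    Persona("Jordan", "Limited mobility", ("adaptive controller",)),
]

for persona in PANEL:
    print(persona.to_agent_kwargs()["role"])
```

If CrewAI is installed, each dictionary could then be unpacked into an agent, for example `Agent(**persona.to_agent_kwargs(), tools=[SerperDevTool()])`. Keeping personas as data makes it easy to add or adjust a panel member without touching the orchestration code.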

Result:

Generated a detailed report identifying the top 20 accessibility issues faced by gamers with disabilities

Validated that the model could simulate human-like panel feedback and surface relevant accessibility concerns
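The UX Research Agent turns many persona comments into a ranked list. That aggregation step can be illustrated without an LLM. The sketch below assumes each persona's feedback has already been reduced to short issue labels and ranks each issue by how many distinct personas raised it. Both the labels and the ranking rule are hypothetical and are not the project's actual method.

```python
from collections import Counter


def top_issues(feedback: dict[str, list[str]], n: int = 20) -> list[tuple[str, int]]:
    """Rank issue labels by how many distinct personas raised them (hypothetical rule)."""
    counts = Counter()
    for issues in feedback.values():
        # set() so a persona repeating the same issue is only counted once
        counts.update(set(issues))
    # Sort by count (descending), then alphabetically so ties have a stable order.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:n]


# Hypothetical, already-labelled panel feedback.
feedback = {
    "blind": ["no screen reader support", "audio cues only", "small text"],
    "low_vision": ["small text", "low contrast UI"],
    "deaf": ["no subtitles", "audio cues only"],
    "motor": ["no button remapping", "quick-time events too fast"],
}

print(top_issues(feedback, n=3))
# → [('audio cues only', 2), ('small text', 2), ('low contrast UI', 1)]
```

Counting distinct personas rather than raw mentions keeps one verbose agent from dominating the ranking. A real report would likely also weigh severity.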

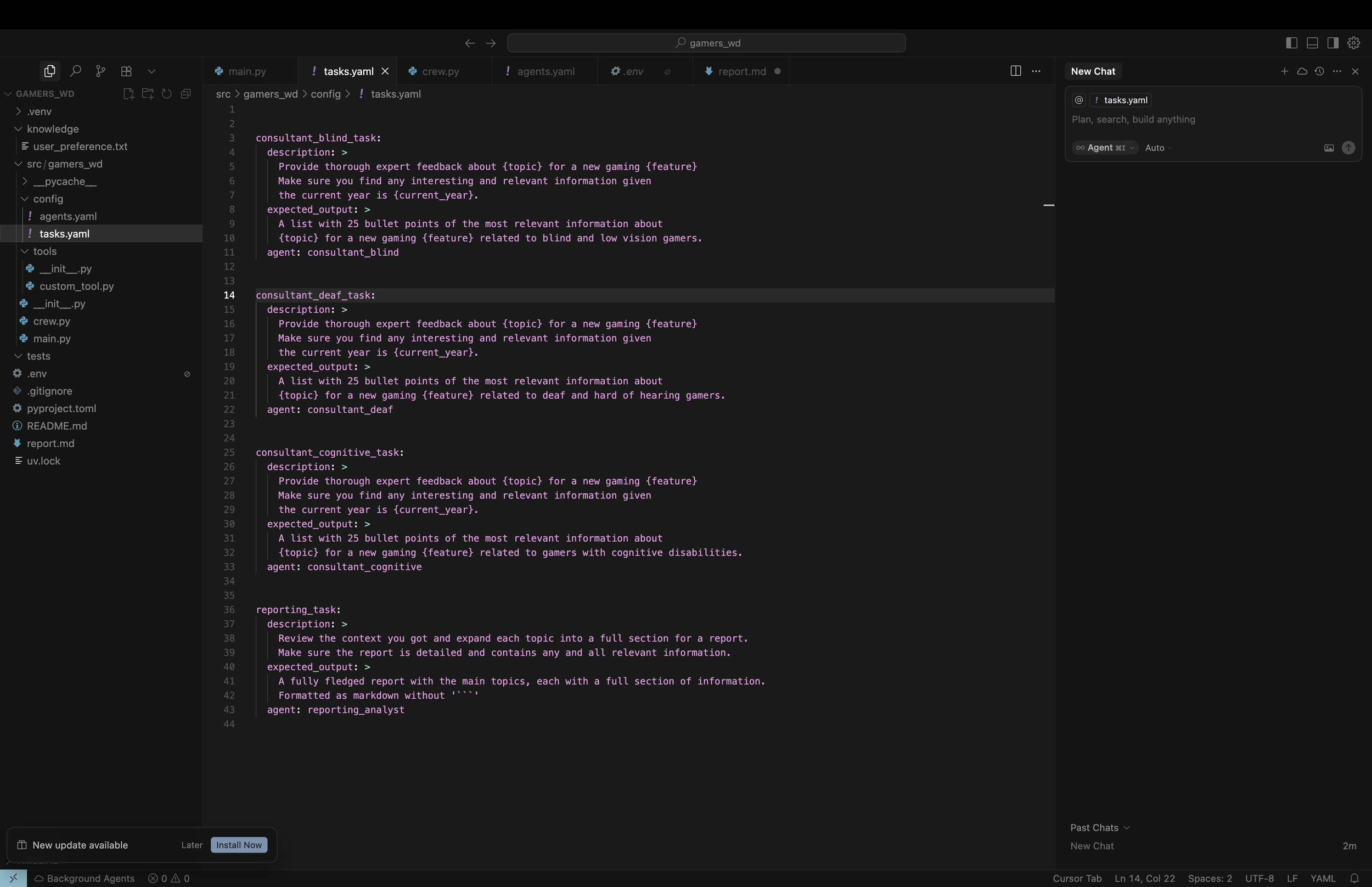

Creating the tasks each agent needed to perform in CrewAI.
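In CrewAI, a task pairs a `description` and an `expected_output` with an `agent`. A later task can receive earlier results through its `context` parameter. The following minimal sketch lays out one plausible fan-out/fan-in plan as plain data: one review task per persona, then a synthesis task for the UX Research Agent that depends on all of the reviews. The `TaskSpec` class, the `build_task_plan` helper, and all task wording are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class TaskSpec:
    """Hypothetical stand-in for the fields passed to crewai.Task."""
    description: str
    expected_output: str
    agent: str
    context: list["TaskSpec"] = field(default_factory=list)


def build_task_plan(concept: str, personas: list[str]) -> list[TaskSpec]:
    # Fan out: one independent review task per persona.
    reviews = [
        TaskSpec(
            description=f"Review this game concept from the perspective of a {p}: {concept}",
            expected_output="A bulleted list of accessibility barriers, each with severity and a suggested fix",
            agent=p,
        )
        for p in personas
    ]
    # Fan in: the synthesis task receives every review as context.
    synthesis = TaskSpec(
        description="Merge the panel's findings into a ranked accessibility report",
        expected_output="A structured report of the top issues and the personas that raised each one",
        agent="UX Research Agent",
        context=reviews,
    )
    # Reviews are listed first so a sequential run finishes them before synthesis.
    return reviews + [synthesis]


plan = build_task_plan(
    "A rhythm game driven by audio cues",
    ["blind player", "deaf player", "player with limited mobility"],
)
for task in plan:
    print(task.agent, "<-", [t.agent for t in task.context])
```

Mapping each `TaskSpec` onto a real `crewai.Task` would be a one-to-one translation. A `Crew(..., process=Process.sequential)` would then run the tasks in list order.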